Researchers at the University of California have unveiled a groundbreaking AI-powered system capable of restoring natural speech to paralyzed individuals using their own voices. This advanced technology, demonstrated in a clinical trial, allows patients who are unable to speak to communicate effectively in real time. By combining brain-computer interfaces (BCIs) with sophisticated AI techniques, this innovation marks a significant leap in assistive technology for individuals with severe speech impairments.

| Article Subheadings |
|---|
| 1) Overview of the AI Technology |
| 2) Mechanism Behind the Innovation |
| 3) Achievements in Real-Time Speech Synthesis |
| 4) Overcoming Historical Challenges |
| 5) Future Prospects for AI and BCIs |

Overview of the AI Technology

The system stems from collaborative research at UC Berkeley and UC San Francisco. The two institutions combined their expertise to create an AI-based system that interprets brain signals and translates them into audible speech. The approach aims to give a voice to people who have lost the ability to speak because of paralysis or other neurological conditions. Working with a clinical trial participant, the researchers demonstrated the impact such a system can have on the quality of life of those affected.

The aim is not simply to produce any speech, but to enable real-time communication in the individual’s natural voice. The research team, led by co-principal investigators, has emphasized the importance of personalizing speech output to reflect each person’s unique voice characteristics. For these individuals, the ability to communicate effectively can significantly enhance emotional well-being and overall quality of life. The innovation could also bring social and psychological benefits, allowing patients to engage more fully with family and friends.

Mechanism Behind the Innovation

The technology integrates various components, including high-density electrode arrays that record neural activity directly from the surface of the brain. It also employs microelectrodes that penetrate the brain’s surface, complemented by non-invasive electromyography sensors on the face to measure muscle activity associated with speech production. This multifaceted approach allows the system to tap into the brain’s communication pathways.

The core of the system involves sampling data from the brain’s motor cortex, which controls speech production. The AI algorithms are trained to decode this neural data and translate it into recognizable speech. The study’s co-lead author, Cheol Jun Cho, explained that this system intercepts brain signals at the articulation stage, where the brain is converting thought into words. The ability to translate these signals into speech reflects a significant advancement in the field of brain-computer interfacing.

Achievements in Real-Time Speech Synthesis

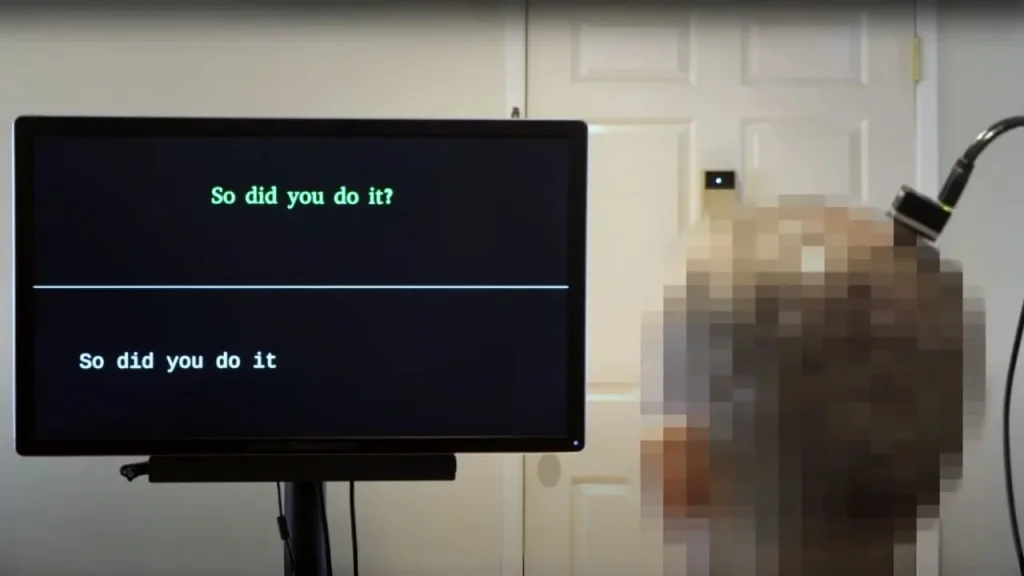

A major breakthrough of this technology is its real-time speech synthesis. Unlike earlier systems that struggled with latency, the new model can decode and synthesize speech almost instantaneously, within 80 milliseconds. This addresses the delays common in earlier speech-decoding systems and allows for a more natural flow of conversation, which is critical for effective communication.
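To make the idea of decoding in short increments concrete, here is a minimal sketch of how a continuous signal could be cut into 80-millisecond windows and handed to a decoder one window at a time. It is an illustration only, not the researchers' code: the function names, the 200 Hz sample rate used in the usage note, and the `toy_decode` placeholder (which just averages signal magnitude) are all assumptions made for this example.

```python
from typing import Iterator, List, Sequence

WINDOW_MS = 80  # increment size taken from the article; everything else is assumed


def samples_per_window(sample_rate_hz: int, window_ms: int = WINDOW_MS) -> int:
    """Return how many samples fall inside one window."""
    size = sample_rate_hz * window_ms // 1000
    if size <= 0:
        raise ValueError("sample rate too low for the chosen window length")
    return size


def stream_windows(
    signal: Sequence[float], sample_rate_hz: int, window_ms: int = WINDOW_MS
) -> Iterator[Sequence[float]]:
    """Yield consecutive, non-overlapping windows; a trailing partial window is dropped."""
    size = samples_per_window(sample_rate_hz, window_ms)
    for start in range(0, len(signal) - size + 1, size):
        yield signal[start:start + size]


def toy_decode(window: Sequence[float]) -> float:
    """Hypothetical stand-in for a trained decoder: mean absolute amplitude."""
    return sum(abs(x) for x in window) / len(window)


def decode_stream(signal: Sequence[float], sample_rate_hz: int) -> List[float]:
    """Decode each window as soon as it is complete, instead of waiting for the full signal."""
    return [toy_decode(w) for w in stream_windows(signal, sample_rate_hz)]
```

With an assumed 200 Hz sample rate, each window holds 16 samples, so a 40-sample signal produces two decoded outputs and the last 8 samples wait for more data. The design point is that output becomes available after each short window rather than after a whole sentence, which is what keeps the delay low.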

Furthermore, the production of naturalistic speech also stands out as a major achievement. The AI system can replicate a patient’s vocal characteristics, allowing for personalized communication that sounds like the individual before they lost their ability to speak. For patients with no residual vocalization, researchers have implemented a pre-trained text-to-speech model guided by recordings of the individual’s voice prior to their condition, thereby preserving their unique voice identity.

Overcoming Historical Challenges

Historically, one of the significant hurdles for BCIs has been accurately mapping neural data to speech output, especially when patients do not retain any vocal capabilities. The implementation of a pre-trained text-to-speech model specifically tailored to the individual’s pre-injury voice has been a revolutionary solution to this problem. This innovation not only maintains vocal integrity but also ensures that the synthesized speech closely resembles how the person would have communicated prior to their condition.

These developments underscore the importance of continued research and ethical consideration in the field of neuroprosthetics. Collaboration among researchers, medical professionals, and technology developers is proving essential as they push the boundaries of what’s possible with BCIs. By overcoming these critical challenges, the initiative offers renewed hope for individuals living with severe impairments and reflects the transformative potential of advanced technologies.

Future Prospects for AI and BCIs

Looking ahead, researchers are optimistic about refining the technology further. Future goals include improving the system’s performance, especially the expressiveness and emotional tone of the synthesized speech. The aim is to enhance communication not just in the words spoken but also in emotional delivery: pitch, loudness, and other paralinguistic features that convey nuanced meaning in speech.

The long-term implications of this research extend beyond merely providing speech. If successful, this technology has the potential to fundamentally alter the way healthcare professionals interact with patients who have lost their ability to communicate due to conditions such as ALS, musculoskeletal diseases, or severe stroke. As such systems become more prevalent, they could redefine patient support and rehabilitation strategies, enhancing social integration and emotional health among affected individuals.

| No. | Key Points |
|---|---|
| 1 | The AI system restores speech in real-time using the patient’s own voice. |
| 2 | Significant advancements in speech synthesis have led to a nearly instantaneous response. |
| 3 | The technology overcomes historical challenges of neural mapping and vocal preservation. |
| 4 | Future research aims to enhance emotional expressiveness in synthesized speech. |
| 5 | Potential to transform communication and emotional well-being for individuals with speech impairments. |

Summary

The development of an AI-driven system that restores speech using patients’ own voices is a pioneering achievement in the field of neuroprosthetics. The technology promises to make significant strides in enhancing communication for individuals with severe speech impairments. While challenges remain, particularly regarding expressiveness and processing accuracy, the potential benefits for users are profound, fostering a return to meaningful interaction and improved quality of life.

Frequently Asked Questions

Question: How does the AI system restore speech?

The AI system restores speech by interpreting neural signals from the brain and translating them into audible speech using the patient’s own voice.

Question: What are the key components of this technology?

Key components include high-density electrode arrays that capture neural activity, microelectrodes for penetrating the brain’s surface, and surface electromyography sensors for measuring facial muscle activity.

Question: What future advancements are anticipated in this research?

Future advancements aim to refine the AI’s capability to convey emotional tone and expressiveness in synthesized speech, thus improving the overall quality of communication for users.