Artificial Intelligence (AI) technology is rapidly evolving, producing hyperrealistic “digital twins” of notable public figures, including politicians and celebrities. This advancement raises critical questions about legality and the rights of individuals whose images and voices are manipulated without consent. As celebrities like Scarlett Johansson voice their concerns, the debate over the implications of deepfake technology is intensifying, particularly as the United States works to extend its lead in AI development over competing nations.

| Article Subheadings |
|---|
| 1) The Rise of Deepfake Technology |
| 2) Celebrity Concerns: A Case Study |
| 3) The Legislative Landscape |
| 4) Maintaining Technological Leadership |
| 5) The Future of AI and Autonomous Driving |

The Rise of Deepfake Technology

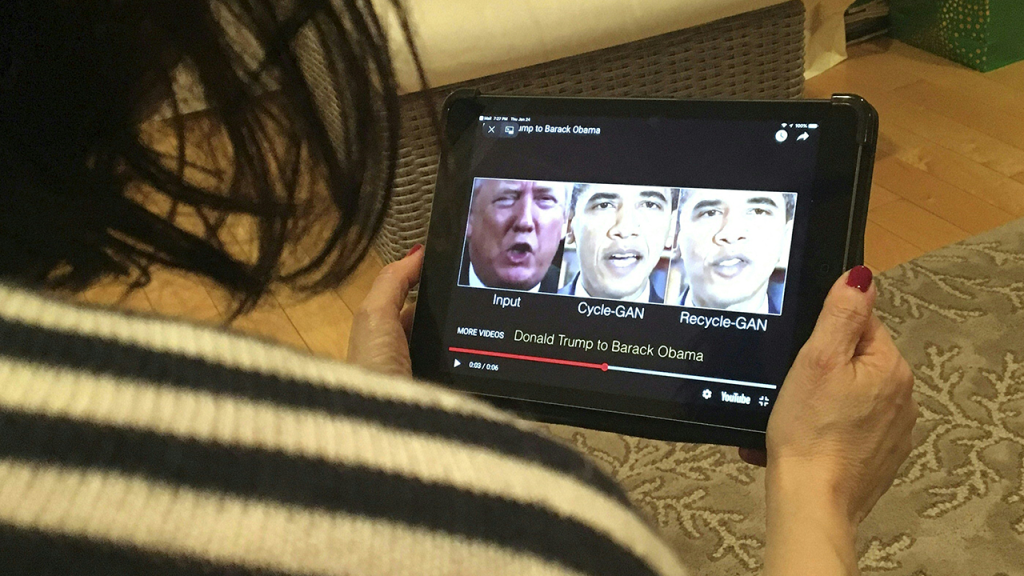

Deepfake technology has emerged as a groundbreaking yet controversial development in the field of artificial intelligence. Using neural networks, deepfake applications can create strikingly realistic video and audio of individuals, altering what they appear to say and how they appear to express emotion. This raises significant ethical dilemmas as the line between real and fabricated footage blurs. The technology is not confined to entertainment; it can also be used in disinformation campaigns and public manipulation. These uses pose grave concerns for personal privacy and for the integrity of information, since fabricated media can distort public perception.

The public first became aware of deepfake technology’s potential through viral videos and memes that showcased how easily a person’s likeness could be recreated or altered. As the technology has advanced, deepfakes have moved beyond benign entertainment and into contentious debates about fake news and political misinformation, where altered videos of politicians can drastically affect public opinion. This dual use, for entertainment and for misinformation, has undermined the credibility of visual media, compelling audiences to question what is real and what is fabricated.

Celebrity Concerns: A Case Study

One of the most vocal critics of deepfake technology is Scarlett Johansson. Her concerns echo a broader sentiment among celebrities regarding the unauthorized use of their likenesses in AI-generated content. Johansson’s likeness has been used without her consent in AI applications, which prompted her to speak out about the dangers of AI in a society increasingly defined by digital interactions. In a recent interview, she emphasized, “If that can happen to me, how are we going to protect ourselves from this?” This statement underlines the pressing need for clearer boundaries and regulations concerning the use of AI in media.

Johansson’s comments reflect the views of numerous artists who worry about their intellectual property and personal branding being exploited without their endorsement. These cases also raise questions about the legal frameworks needed to protect individuals from unauthorized use of their images and identities, and they underscore the urgent need for the entertainment and tech industries to address these issues.

The Legislative Landscape

As deepfake technology proliferates, lawmakers and legal scholars are beginning to grapple with formulating appropriate regulations to combat the risks associated with this technology. Currently, the legal landscape is fragmented, with different states adopting varied approaches to tackle the implications of deepfakes. Some jurisdictions are enacting laws specifically addressing the malicious use of deepfakes, particularly concerning non-consensual pornography and political misinformation.

Despite these efforts, many argue that existing legal frameworks are inadequate to manage the complexities introduced by digital twins. Furthermore, the rapid pace of AI advancements often outstrips the capacity of legal systems to respond effectively. Stakeholders ranging from legal experts to technology developers are emphasizing the importance of collaborative approaches to ensure that laws remain up-to-date with evolving technologies while safeguarding both personal privacy and freedom of expression.

Maintaining Technological Leadership

In response to the growing threat posed by global competitors, including China, the U.S. government is taking significant steps to preserve its leadership in AI advancements. Recently, officials have reached out to organizations like OpenAI to gather information and create comprehensive strategies to enhance the United States’ technological edge. This initiative includes the proposal of an “AI Action Plan” aimed at fostering innovations that will keep U.S. businesses and technologies at the forefront of the AI landscape.

This move is particularly crucial given the competitive atmosphere surrounding AI technology. With nations around the world investing heavily in AI and machine learning capabilities, the U.S. is intent on ensuring it does not fall behind. The collaboration with industry leaders aims to not only spearhead technological advancements but also establish ethical guidelines for their implementation, addressing concerns raised by individuals and organizations alike.

The Future of AI and Autonomous Driving

As AI technology continues to evolve, its applications are expanding beyond media and entertainment, leading to excitement in various sectors, especially transportation. Companies such as Stellantis are working on advancements in autonomous driving technology, hoping to change how people commute in the near future. Their STLA AutoDrive 1.0 system is designed to deliver a highly automated driving experience that allows drivers to focus on other activities during transit without the need to monitor the road constantly.

This new vision of transportation promises to reshape daily routines, yet it also raises questions about safety, regulatory oversight, and technological reliability. The evolution of autonomous vehicles must coincide with comprehensive legal standards to protect drivers and passengers while simultaneously fostering innovation. As industry and government collaborate, the challenge will be to balance the pursuit of innovation with the safeguarding of public interests, ensuring that advancements contribute positively to society.

| No. | Key Points |
|---|---|
| 1 | Deepfake technology is growing rapidly and poses significant ethical dilemmas. |
| 2 | Celebrities like Scarlett Johansson are raising concerns about unauthorized use of their images. |
| 3 | The legal landscape surrounding deepfakes is fragmented and requires clearer regulations. |
| 4 | The U.S. government aims to maintain AI dominance amidst global competition. |
| 5 | Advancements in autonomous driving technology are set to change commuting experiences. |

Summary

In conclusion, the evolution of AI technologies, particularly deepfakes, presents growing challenges to personal privacy and the integrity of information. As concerns rise among public figures regarding unauthorized use of their likenesses, the need for clear legal frameworks becomes increasingly apparent. Simultaneously, the United States is actively working to strengthen its leadership in AI against international competitors, while advances in autonomous driving promise to reshape transportation. The conversation on how to responsibly integrate these advancements continues to develop, highlighting the importance of balancing technological innovation with ethical standards.

Frequently Asked Questions

Question: What is deepfake technology?

Deepfake technology uses artificial intelligence to create realistic but fake images, videos, or audio of real people by manipulating existing media.

Question: How does deepfake technology affect public perception?

Deepfakes can distort public perception by presenting false narratives or misleading information, particularly in political contexts where manipulated videos can influence voter opinions.

Question: What steps are being taken to regulate AI technologies?

Lawmakers are working on creating laws aimed at protecting individuals from malicious uses of deepfake technology and ensuring ethical practices in AI development.