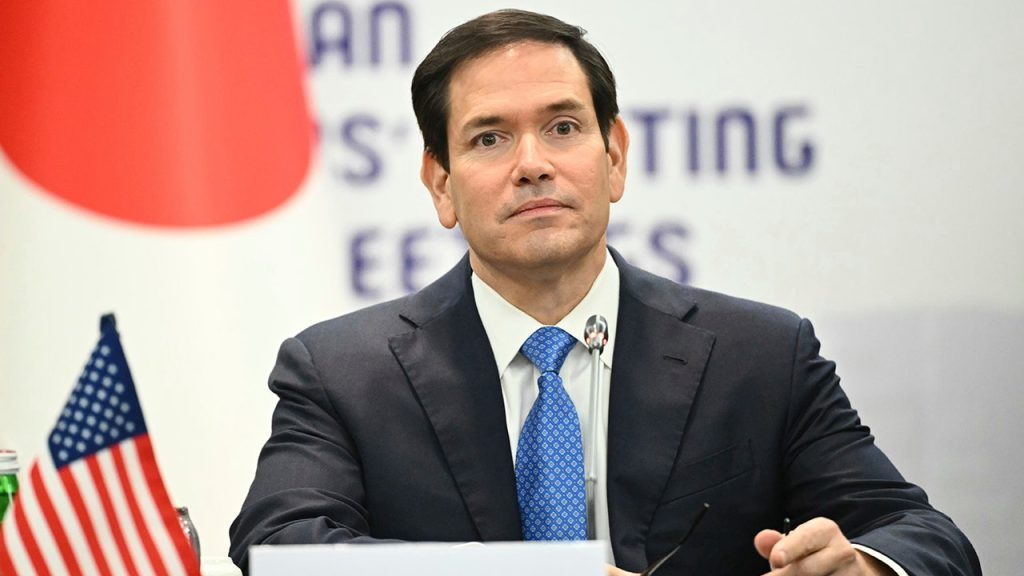

The U.S. State Department is investigating a bizarre case in which an impersonator posing as Secretary of State Marco Rubio used advanced artificial intelligence technology to deceive government officials. The incident raises serious concerns about security protocols and the potential for digital impersonation in diplomacy. As AI continues to develop rapidly, the case underscores the pressing need for robust regulations to mitigate the risks these technologies pose.

| Article Subheadings |
|---|
| 1) The Incident: Digital Impersonation Uncovered |
| 2) Behind the Technology: Understanding AI’s Role |
| 3) Implications for National Security |
| 4) The Global Response to AI Misuse |
| 5) Future of AI Regulation |

The Incident: Digital Impersonation Uncovered

In a startling event, officials have reported that an individual impersonated Secretary of State Marco Rubio using sophisticated AI technologies. The impersonator communicated with both U.S. and foreign officials, raising alarm at the highest levels of government. In late June 2025, the State Department’s security team became aware of unusual communications that appeared to come from Rubio, prompting an internal investigation.

This was not merely a case of miscommunication: the impersonator used AI tools to replicate Rubio’s voice and communication style. Reports indicate that the messages were designed to influence negotiations and discussions critical to U.S. foreign policy. The episode illustrates a chilling scenario and exposes vulnerabilities in how governments verify official communications.

The investigation is ongoing, with the State Department working alongside cybersecurity specialists and intelligence agencies to trace the source of the deception. The findings may have implications well beyond this one case, and the incident has already sparked a broader conversation about how AI technologies can be exploited for malicious purposes.

Behind the Technology: Understanding AI’s Role

To fully grasp the implications of this incident, it is essential to understand the technology that enabled it. The impersonator’s use of AI likely involved machine learning algorithms designed to analyze and replicate human voice and speech patterns. This technology has rapidly advanced in recent years, allowing even relatively inexperienced individuals to create convincing fake audio and video clips.

AI can simulate not just voice but also facial expressions and body language, with developments in deepfake technology amplifying the potential for misuse. By utilizing these technologies, the impersonator could craft messages that were nearly indistinguishable from genuine communications, creating further challenges for those managing diplomatic relations.

While the technologies themselves can foster innovation and efficiency across various sectors, their potential for misuse highlights the need for ethical considerations and responsible development practices. Understanding the mechanics of such technology is crucial to developing countermeasures that can thwart malicious applications.

Implications for National Security

The ramifications of AI impersonation extend into the realm of national security. As AI becomes more prevalent across sectors, deepfakes and impersonation pose substantial risks, especially in diplomatic contexts. By manipulating communications, adversaries can undermine trust among nations and potentially disrupt critical negotiations.

Governments around the world are increasingly concerned about what such technologies enable. The incident involving Marco Rubio serves as a wake-up call and has prompted demands for immediate action to strengthen existing cybersecurity frameworks. Diplomats and policymakers must be aware of these tools and adopt verification procedures so they are not misled by manipulated content.

Evolving threats call for a response that includes educational initiatives focused on digital literacy, alongside technological solutions like enhanced authentication measures for communications involving state officials. National security agencies are emphasizing the importance of vigilance in order to maintain the integrity of international relations.

The Global Response to AI Misuse

The impersonation incident has not only attracted attention within the United States but is also being closely monitored by global partners. Countries around the world are beginning to reevaluate their own security protocols in light of such events. The potential for AI technologies to influence political stability and international relations is significant.

In response, several international coalitions are forming to address the challenges posed by advanced digital technologies. These collaborations aim to draft guidelines and best practices for the ethical use of AI. Various nations are actively participating in discussions designed to mitigate the risks posed by disinformation, which can escalate conflicts and create distrust among allied nations.

In this global push for better governance of AI, the incident involving Marco Rubio has acted as a catalyst for urgent discussions and closer collaboration among nations. It highlights the need for a united approach to the challenges posed by technological advancement.

Future of AI Regulation

With the rapid evolution of AI technologies, the question of regulation becomes paramount. Lawmakers and tech experts are grappling with how to balance innovation with the need for security. This incident has sparked discussions about how to draft comprehensive legislation that keeps pace with technological advancements while safeguarding national and global interests.

Emerging regulatory frameworks emphasize preventive measures and transparency in AI applications. For instance, systems that can identify and verify AI-generated content could be crucial. Fostering a culture of accountability among both technology developers and users could also help deter misuse.

In this evolving landscape, it is essential for governments to collaborate with tech companies and cybersecurity experts to establish standards that minimize risks. The future of AI regulation may well include international treaties governing the ethical use of AI technologies, reflecting how interconnected the world has become.

| No. | Key Points |
|---|---|
| 1 | The State Department is investigating an impersonation case involving Secretary of State Marco Rubio. |
| 2 | Advanced AI technology enabled the impersonator to convincingly mimic Rubio’s voice and communication style. |
| 3 | The incident raises serious national security concerns about the use of AI in diplomatic communications. |
| 4 | International discussions regarding AI ethics and standards are becoming increasingly urgent. |
| 5 | Future AI regulation will require collaboration among governments, tech companies, and cybersecurity experts. |

Summary

The impersonation of Secretary of State Marco Rubio through AI technology has brought to light critical vulnerabilities within diplomatic communications. This incident serves as a reminder of the pressing challenges posed by rapidly evolving AI technologies and the necessity for robust regulatory measures. As governments and international coalitions work to address these issues, both ethical considerations and security protocols must evolve to safeguard against potential misuse in the future.

Frequently Asked Questions

Question: What technologies were used in the impersonation incident?

The impersonator is believed to have used advanced AI tools capable of replicating human voice and speech patterns, a category that includes deepfake technology.

Question: Why is this incident significant for national security?

It highlights vulnerabilities in diplomatic communications and the potential for AI-generated misinformation to undermine international relations.

Question: What steps are being considered for AI regulation?

Lawmakers are discussing comprehensive regulatory frameworks that include preventative measures, transparency, and international collaborations to manage the ethical use of AI technologies.